“With great power comes great responsibility”

– Many (from the French Revolution…to Uncle Ben in Spider-Man)

Following the 30 Day AI Challenge and my high-level recap on impacts (where I clarify that I’m mostly excited about the positive impacts of AI, but that we do need to take a quicker, more measured approach to managing the risks), I wanted to dig a little deeper into the hidden societal downsides and how we get ahead of them through quicker alignment, regulation and dialogue.

There’s a fair bit of source material out there, but nothing better than The A.I. Dilemma, a talk given in early March 2023 by Tristan Harris and Aza Raskin, who discuss how existing AI capabilities already pose a grave threat to society. If you’re wondering, Tristan Harris was also featured in the acclaimed documentary The Social Dilemma, which explored how social media companies ruthlessly optimized for engagement, resulting in addiction, manipulation and societal polarization. I’ve watched the 1hr+ talk a couple of times now and highly recommend it.

Here are ten startling insights and considerations from this talk:

1. We’re At A Pivotal Moment In Human History

We’re at a similar moment in history to when nuclear weapons were first created, and we have a responsibility to step back and think about how we deploy this new, advanced technology. This talk doesn’t dwell on AI bias, loss of jobs to automation, Artificial General Intelligence (God-like AI), etc., but focuses on more immediate societal risks.

2. The 3 Rules Of Technology May Lead Us To Catastrophe

- When you invent a new technology, you uncover a new class of responsibilities…and it’s not always obvious what those are.

- If the tech confers power, it starts a race.

- If you don’t coordinate, the race ends in tragedy.

3. We Failed At First Contact – Curation AI

Social media was first contact, with relatively simple “curation” AI. Besides all the good it did (like connecting people and giving everyone a voice), it also caused tremendous harm, driven by engagement- (and profit-) maximizing algorithms.

4. Second Contact Is Here – Creation AI

The new Generative AI revolution is second contact with “Creation” AI.

AI underwent a major transformation starting in 2017 with the invention of the Transformer model, which allowed different disciplines within machine learning to merge. Previously, distinct fields like computer vision, speech recognition, and image generation worked separately, making incremental improvements in their respective areas. The Transformer model treats everything as language, including images, sound, fMRI data, and DNA. This shift led to rapid advancements, as progress in one area became immediately applicable to others. Consequently, AI’s exponential growth now benefits from the combined efforts of researchers across disciplines, transcending boundaries and modalities.

5. Gollem-Class AIs Have Scary Abilities

Tristan and Aza call “Generative Large Language Multi-Modal Models” (GLLMMs) “Gollem” AIs, after the golems of folklore: inanimate creatures that suddenly gain their own capacities and previously unintended capabilities.

They go on to show a few compelling examples:

- Text to image and video: DALL-E (and Midjourney, Stable Diffusion, Runway, etc.) transform human language into images and video, and not merely as statistical imitations.

- fMRI translation: reconstruct images or inner monologues from brain activity. There are some scary examples at the 18min mark showing AI interpreting both visuals and thoughts. Original studies: Reading Brain Visuals, Reading Thoughts

- Wi-Fi radio signals: identify people’s positions in a room using radio signals

- Security vulnerabilities: find and exploit security weaknesses in code

- Voice synthesis: replicate someone’s voice with just 3 seconds of audio

The pair also muse that 2023 is the year in which all content verification protocols start breaking down, given AI-generated content (deepfakes, voice cloning, etc.). To illustrate just that, a very recent video shows how bank voice verification can easily be tricked by AI-generated audio.

Another danger they highlight is “entertainment” apps like TikTok with deepfake filters (see the example in the NBC News segment). They show how realistic these filters have become. While politicians are currently hung up on where data servers are based (USA vs. China), all it would take to cause societal chaos is to make Biden and Trump deepfake filters available to the masses, creating a polarized cacophony across the country ahead of the next election.

They sum up this section powerfully: AI is to the virtual and symbolic human world what nukes are to the physical world, since we decode and synthesize reality through language. Also…2024 may be the last human election.

6. New (Unplanned, Unexplained) Abilities Keep Emerging

- Gollem AIs have capabilities experts don’t understand: GPT and other large language models suddenly gain abilities at certain sizes, e.g., arithmetic or answering questions in Persian (or Bengali, in the case of Google’s model, as covered in a 60 Minutes segment)

- AI develops a theory of mind, progressing from the level of a 4-year-old to a 9-year-old in a short time. Researchers don’t know what else these models can do or what capabilities they have. On the flip side, because current models have essentially hoovered up data (mostly for free) off the internet, rather than relying on high-quality curated datasets and human feedback, they still lack advanced reasoning abilities and an understanding of how the physical world works. Watch the recent TED talk by Yejin Choi to learn more.

- Gollem AIs can make themselves stronger by creating their own training data, and faster by optimizing their own code. AI essentially makes stronger AI, leading to a “double exponential curve.”

- Even AI experts struggle to predict AI progress, and AI is beating tests as fast as we can create them.

7. The Arms Race To Deploy AI Is Already Causing Problems

- Experts struggling to keep up with accelerating AI progress, with new developments happening on a daily basis

- AI developments have critical economic and national security implications

- Exponential growth in AI is hard for humans to grasp intuitively, as we’re not biologically wired to handle that sort of developmental speed

- AI impact extends beyond chatbots and AI bias, affecting society and security

- Potential for AI-enabled exploitation, scams, and reality collapse (we’re already seeing AI scams in the news)

- AI could become better than humans at persuasion with an “Alpha Persuade”, much like AlphaGo became better than any known human at the game of Go.

- AI deployment race leading to a race for intimacy among companies

- Rapid adoption: ChatGPT took only 2 months to reach 100 million users

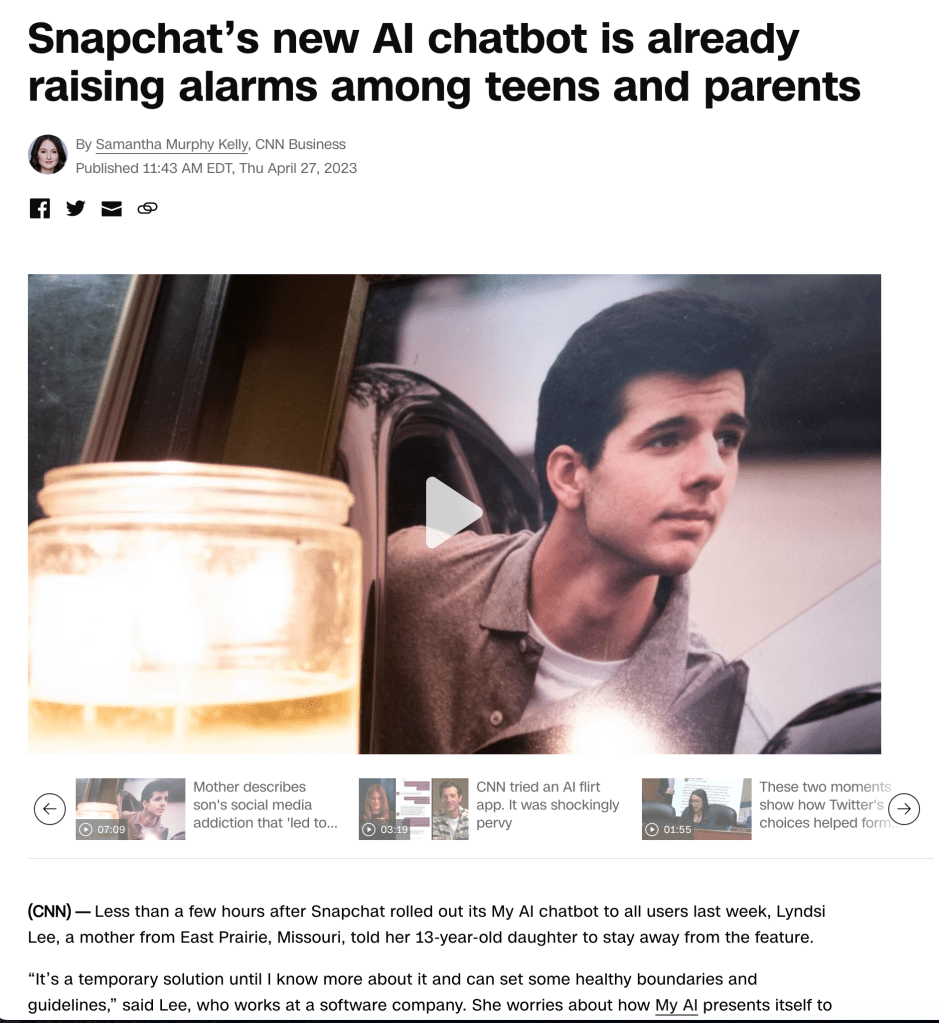

- AI being embedded in widely used platforms like Windows and Snapchat

- Safety concerns: AI engaging inappropriately with minors (see the example at the 47min mark…or more recent news stories about Snapchat’s new “My AI” chatbot)

- 30-to-1 gap between AI developers and AI safety researchers

- Academia struggling to keep up with AI development in large labs

- 50% of AI researchers believe there’s a 10% or greater chance of human extinction due to uncontrollable AI

8. The US Losing To China Isn’t A Valid Justification

Here’s why Tristan and Aza think so:

- Unregulated AI deployments can actually lead to societal incoherence and weakened democracy

- Chinese government currently considers large language models unsafe (and uncontrollable) and so doesn’t deploy them publicly

- Slowing down public releases could also slow down Chinese advances

- China often fast-follows the US, benefiting from open-source models

- Leaked AI models on the internet (e.g., Meta’s LLaMA) can help China catch up

- Recent US export controls effectively slow China’s progress on advanced AI

- Maintaining research pace while limiting public deployment can help maintain a lead over China

9. We Can Still Choose A Better Future

The presentation proposes a couple of solutions, like Know Your Customer (KYC) and liability for AI creators. With larger AI developments coming faster than expected, we need to learn from our social media mistakes and act before AI becomes entangled with society. Technologists, business leaders and world leaders need to quickly uncover the responsibilities that come with this new tech and create laws for it.

10. How We Get There

Tristan and Aza warn of the “rubber band” or “snapback” effect and of gaslighting when discussing AI risks, as it is a difficult topic and we tend to forget about these things once we’re back in our usual routines. We need to acknowledge AI’s potential benefits alongside its risks and create a shared frame of reference for AI problems and solutions.

The pair have done an excellent job of starting the conversation with this video. They also point out that during the nuclear era, a TV film (The Day After) was shown to over 100 million Americans (and Russians), followed by a dialogue that eventually led to global treaties limiting nuclear proliferation and helping avoid nuclear war. We can do the same with AI. We lost at first contact and are hooked on the social media engagement machine. We can choose to act differently on second contact and avoid falling prey to the intimacy (or persuasion) machine.

Hope you found this summary useful…I highly recommend watching the full video. If you’ve devoured that and want more, here are a couple more thought-provoking videos to watch:
